(Note: If you are here to play with the cool interactive plots and want to skip the lengthy chit chat, scroll down!)

Now that the NBA regular season is over and the playoffs are well under way, I thought I’d share some data analysis I did recently about the largest comebacks in NBA history.

The idea of looking at this came up this past March, during a conversation with Tommy Powers from UW (who will be spending the summer with us as an intern) at ICASSP 2017 in New Orleans. The Boston Celtics at Golden State Warriors game was for some strange reason shown on TV in a bar where we were listening to a great blues band. The Celtics were ahead in Q4 and ended up winning by a large margin, but we were wondering at which point it would become hopeless for the Warriors to stage a comeback.

I thought it’d be fun to have a plot that showed, for any given time in a game, the largest score deficit that a team was in and still ended up winning. For example, at half-time, what was the largest score deficit that a team ended up overcoming? You could imagine the curve starting at 0 at the beginning of the game (games usually start at 0-0), going down as minutes passed to some minimum, and then creeping back up, because with less time left to play, it becomes harder and harder to overcome a large deficit and win.
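To make the idea concrete, here is a minimal sketch of how such a curve could be computed. It is not the code from the repository: the function names and the input format (each game as a list of `(second, home_minus_away)` score changes plus the final home margin) are assumptions made up for illustration.

```python
REGULATION_SECONDS = 4 * 12 * 60  # four 12-minute quarters, overtime ignored


def margin_per_second(events, n_seconds=REGULATION_SECONDS):
    """Forward-fill (second, home_minus_away) score changes into one margin per second."""
    events = sorted(events)
    margins = []
    current = 0  # games start at 0-0
    idx = 0
    for t in range(n_seconds + 1):
        # Apply every score change that happened at or before second t.
        while idx < len(events) and events[idx][0] <= t:
            current = events[idx][1]
            idx += 1
        margins.append(current)
    return margins


def largest_comeback_curve(games, home_wins=True, n_seconds=REGULATION_SECONDS):
    """For each second, the most negative margin faced by a team that still won.

    `games` is a list of (events, final_home_margin) pairs (hypothetical format).
    A value of 0 means no eventual winner was trailing at that second.
    """
    sign = 1 if home_wins else -1  # look at the margin from the winner's side
    curve = [0] * (n_seconds + 1)
    for events, final_home_margin in games:
        if final_home_margin == 0:
            continue  # no winner recorded, skip
        if (final_home_margin > 0) != home_wins:
            continue  # keep only games won by the side we are interested in
        for t, margin in enumerate(margin_per_second(events, n_seconds)):
            curve[t] = min(curve[t], sign * margin)
    return curve
```

With a toy "game" lasting 60 seconds where the home team trails by 5 before winning by 1, `largest_comeback_curve(games, home_wins=True, n_seconds=60)` stays at -5 from second 30 until the home team takes the lead, then goes back to 0.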

I poked around on the internet for play-by-play data logs, and thought I’d have to write a scraper to get the data from some sports website, when I stumbled upon a Reddit thread mentioning that stats.nba.com had an API from which the data could be accessed. Further googling with the magic word (“github”) quickly showed that (of course!) several people had already written Python wrappers to do the heavy lifting. I decided to use statsnba-playbyplay, as it seemed to have the appropriate features.

A few (read: way too many) hours later, I was able to get the plots I wanted. They only cover the seasons from 1996-97 to 2016-17, because play-by-play data is not available for earlier seasons. I also arbitrarily decided to only consider regular season games, and to not show overtime periods (of which I realized some games had many!).
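Dropping overtime mostly comes down to putting every event on a single regulation clock. Here is a minimal sketch of that step, assuming each play-by-play event comes with a period number and a "minutes:seconds" string for the time remaining in that period; both the helper name and that input format are assumptions for illustration, not necessarily what the wrapper returns.

```python
from typing import Optional

QUARTER_SECONDS = 12 * 60  # a regulation quarter lasts 12 minutes


def elapsed_seconds(period: int, clock_remaining: str) -> Optional[int]:
    """Seconds since tip-off for a regulation event, or None for overtime events."""
    if period > 4:
        return None  # periods 5 and up are overtime, which is left out
    minutes, seconds = clock_remaining.split(":")
    remaining = int(minutes) * 60 + int(seconds)
    return (period - 1) * QUARTER_SECONDS + (QUARTER_SECONDS - remaining)
```

Events for which the helper returns `None` can simply be skipped, so every game ends up on the same 0 to 2880 second axis.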

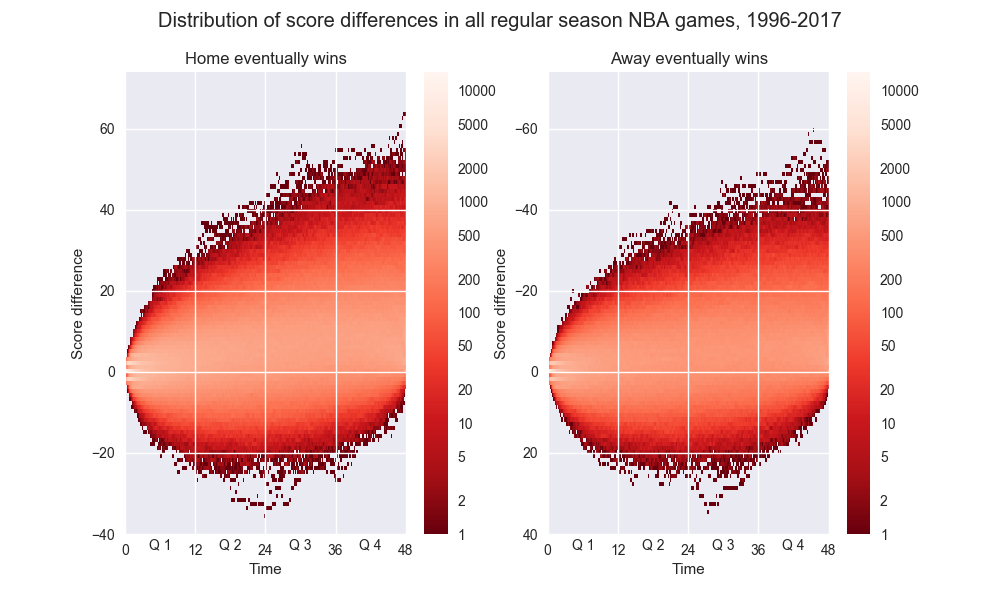

Without further ado, here are the results for the largest comebacks overall from 1996 to 2017, with separate charts for games where the home team eventually wins and games where the away team eventually wins. You can hover over the plots (made with plot.ly) to see the list of games that correspond to the largest comebacks at each second.

So the Utah Jazz were able to overcome a 36-point deficit against the Denver Nuggets in 1996, at home. Conveniently, the largest deficit occurred just before half-time. The largest comeback for an away team goes to the Sacramento Kings, who in 2009 ended up beating the Chicago Bulls, in Chicago, even though the Bulls were 35 points ahead about three and a half minutes into the third quarter: the crowd must not have been pleased!

Here are plots showing each season separately (this may take some time to load):

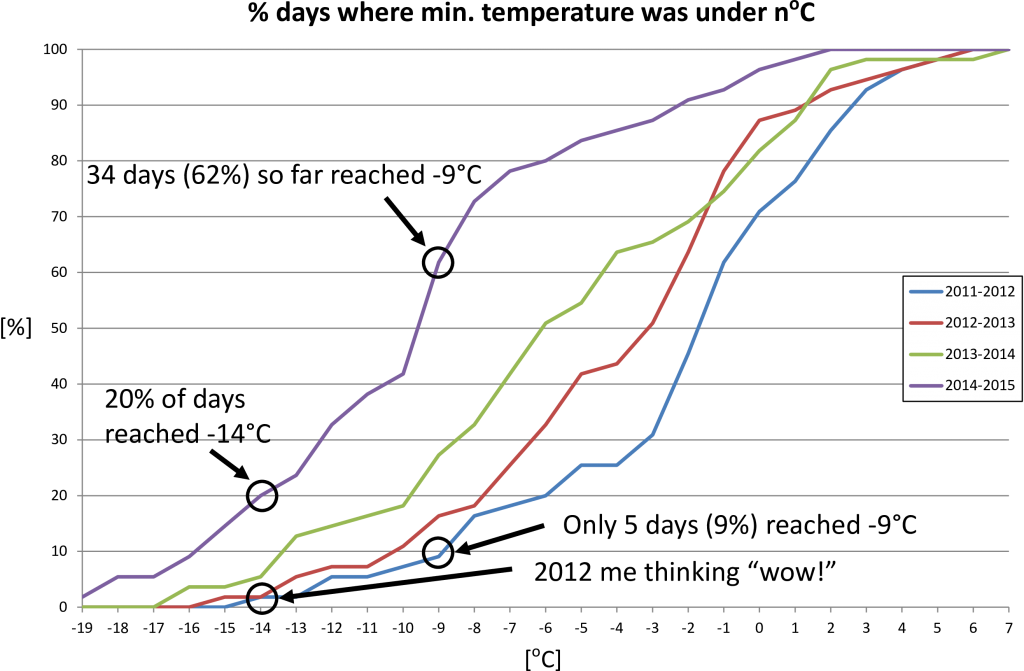

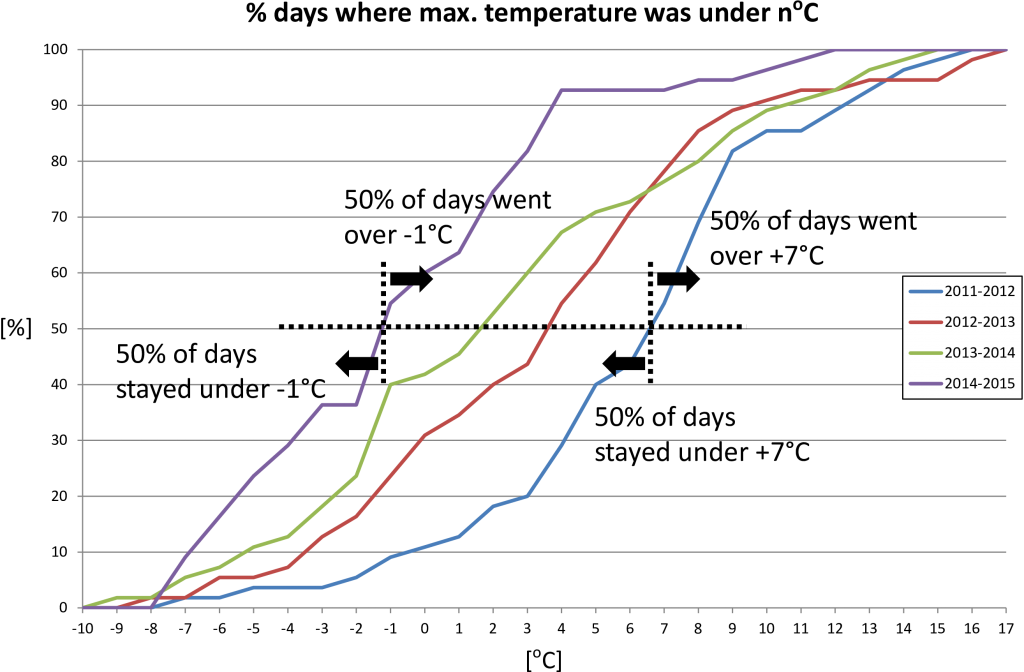

It’s pretty clear by comparing all seasons how the Nuggets at Jazz and Kings at Bulls games were outliers. We can also look at the distribution of scores in all games from 1996 to 2017, to show how rare such large comebacks are:

| Season | Home / Away | Score | Game | Date | Time of largest score deficit |
|---|---|---|---|---|---|
| 1996-97 | Home | -36 | Denver Nuggets at Utah Jazz | Wednesday, November 27, 1996 | Q2 11’40” to Q2 11’56” |
| 1996-97 | Away | -27 | Phoenix Suns at Dallas Mavericks | Sunday, March 2, 1997 | Q3 8’51” to Q3 9’32” |
| 1997-98 | Home | -24 | Chicago Bulls at Utah Jazz | Wednesday, February 4, 1998 | Q2 0’47” to Q2 2’28” |
| 1997-98 | Away | -24 | Minnesota Timberwolves at Dallas Mavericks | Saturday, January 17, 1998 | Q3 6’7” to Q3 6’20” |
| 1998-99 | Home | -23 | Houston Rockets at San Antonio Spurs | Sunday, April 18, 1999 | Q2 1’4” to Q2 1’20” |
| 1998-99 | Away | -28 | Los Angeles Lakers at Golden State Warriors | Tuesday, April 20, 1999 | Q2 2’22” to Q2 7’42” |
| 1999-00 | Home | -22 | San Antonio Spurs at Dallas Mavericks | Tuesday, March 21, 2000 | Q2 10’28” to Q2 11’39” |
| 1999-00 | Away | -23 | Sacramento Kings at Los Angeles Clippers | Saturday, March 18, 2000 | Q2 3’29” to Q2 3’40” |
| 2000-01 | Home | -24 | Miami Heat at Sacramento Kings | Sunday, December 10, 2000 | at Q2 0’0” |
| 2000-01 | Away | -28 | Sacramento Kings at Phoenix Suns | Wednesday, March 7, 2001 | Q2 8’36” to Q2 8’46” |
| 2001-02 | Home | -23 | Charlotte Hornets at New Jersey Nets | Sunday, February 24, 2002 | Q3 3’21” to Q3 3’25” |
| 2001-02 | Away | -25 | Memphis Grizzlies at Portland Trail Blazers | Monday, March 25, 2002 | Q3 7’14” to Q3 8’39” |
| 2002-03 | Home | -30 | Dallas Mavericks at Los Angeles Lakers | Friday, December 6, 2002 | Q3 0’40” to Q3 0’57” |
| 2002-03 | Away | -23 | Los Angeles Lakers at Memphis Grizzlies | Friday, April 4, 2003 | Q4 0’0” to Q4 0’13” |
| 2002-03 | Away | -23 | Boston Celtics at Philadelphia 76ers | Monday, January 20, 2003 | Q3 1’10” to Q3 1’31” |
| 2003-04 | Home | -25 | New Orleans Hornets at Cleveland Cavaliers | Monday, February 23, 2004 | Q2 4’15” to Q2 5’34” |
| 2003-04 | Away | -29 | Phoenix Suns at Boston Celtics | Friday, December 5, 2003 | Q3 0’23” to Q3 0’49” |
| 2004-05 | Home | -22 | Washington Wizards at Toronto Raptors | Friday, February 4, 2005 | Q3 3’40” to Q3 4’13” |
| 2004-05 | Away | -24 | Los Angeles Clippers at Chicago Bulls | Saturday, November 13, 2004 | Q2 5’30” to Q2 5’41” |
| 2005-06 | Home | -25 | Charlotte Bobcats at Chicago Bulls | Wednesday, November 2, 2005 | Q3 3’22” to Q3 3’28” |
| 2005-06 | Home | -25 | Boston Celtics at Miami Heat | Thursday, March 16, 2006 | Q2 8’37” to Q2 9’24” |
| 2005-06 | Away | -19 | Los Angeles Clippers at Golden State Warriors | Monday, January 23, 2006 | Q3 8’10” to Q3 8’36” |
| 2005-06 | Away | -19 | Philadelphia 76ers at Minnesota Timberwolves | Sunday, January 22, 2006 | Q3 10’2” to Q3 10’20” |
| 2006-07 | Home | -27 | New Orleans/Oklahoma City Hornets at Portland Trail Blazers | Friday, November 10, 2006 | Q2 0’20” to Q2 0’24” |
| 2006-07 | Away | -25 | Seattle SuperSonics at Minnesota Timberwolves | Tuesday, March 27, 2007 | Q3 6’4” to Q3 6’17” |
| 2007-08 | Home | -25 | Portland Trail Blazers at Philadelphia 76ers | Friday, November 16, 2007 | Q2 8’20” to Q2 8’35” |
| 2007-08 | Away | -25 | Denver Nuggets at Indiana Pacers | Saturday, November 10, 2007 | Q2 6’36” to Q2 6’48” |
| 2008-09 | Home | -29 | Minnesota Timberwolves at Dallas Mavericks | Tuesday, December 30, 2008 | Q3 1’34” to Q3 2’10” |
| 2008-09 | Away | -26 | Philadelphia 76ers at Indiana Pacers | Friday, November 14, 2008 | Q2 0’26” to Q2 0’30” |
| 2009-10 | Home | -24 | Phoenix Suns at Indiana Pacers | Wednesday, January 13, 2010 | Q2 5’51” to Q2 5’58” |
| 2009-10 | Away | -35 | Sacramento Kings at Chicago Bulls | Monday, December 21, 2009 | Q3 3’10” to Q3 3’26” |
| 2010-11 | Home | -23 | Sacramento Kings at New Orleans Hornets | Wednesday, December 15, 2010 | Q3 3’12” to Q3 4’8” |
| 2010-11 | Away | -25 | Toronto Raptors at Detroit Pistons | Saturday, December 11, 2010 | Q3 6’9” to Q3 6’50” |
| 2011-12 | Home | -21 | Milwaukee Bucks at Sacramento Kings | Thursday, January 5, 2012 | Q2 10’1” to Q3 1’4” |
| 2011-12 | Home | -21 | Los Angeles Lakers at Washington Wizards | Wednesday, March 7, 2012 | Q3 4’37” to Q3 4’49” |
| 2011-12 | Away | -27 | Boston Celtics at Orlando Magic | Thursday, January 26, 2012 | Q2 8’49” to Q2 8’57” |
| 2012-13 | Home | -27 | Boston Celtics at Atlanta Hawks | Friday, January 25, 2013 | Q2 5’59” to Q2 6’14” |
| 2012-13 | Away | -27 | Miami Heat at Cleveland Cavaliers | Wednesday, March 20, 2013 | Q3 4’16” to Q3 4’47” |
| 2012-13 | Away | -27 | Milwaukee Bucks at Chicago Bulls | Monday, November 26, 2012 | at Q3 9’10” |
| 2013-14 | Home | -27 | Toronto Raptors at Golden State Warriors | Tuesday, December 3, 2013 | Q3 2’40” to Q3 2’48” |
| 2013-14 | Away | -25 | Indiana Pacers at Detroit Pistons | Saturday, March 15, 2014 | Q2 8’36” to Q2 8’56” |
| 2014-15 | Home | -26 | Sacramento Kings at Memphis Grizzlies | Thursday, November 13, 2014 | Q2 1’14” to Q2 2’1” |
| 2014-15 | Away | -26 | Golden State Warriors at Boston Celtics | Sunday, March 1, 2015 | Q2 5’7” to Q2 5’19” |
| 2015-16 | Home | -26 | Miami Heat at Boston Celtics | Wednesday, April 13, 2016 | Q2 11’1” to Q2 11’55” |
| 2015-16 | Away | -24 | Chicago Bulls at Philadelphia 76ers | Thursday, January 14, 2016 | Q2 5’38” to Q2 5’55” |
| 2016-17 | Home | -28 | Sacramento Kings at San Antonio Spurs | Wednesday, March 8, 2017 | Q2 7’18” to Q2 7’26” |
| 2016-17 | Away | -24 | Memphis Grizzlies at Golden State Warriors | Friday, January 6, 2017 | Q3 6’44” to Q3 7’19” |

If you feel like playing with the data, I put both the code and the data on GitHub. Here is what the code looks like:

That’s it!
